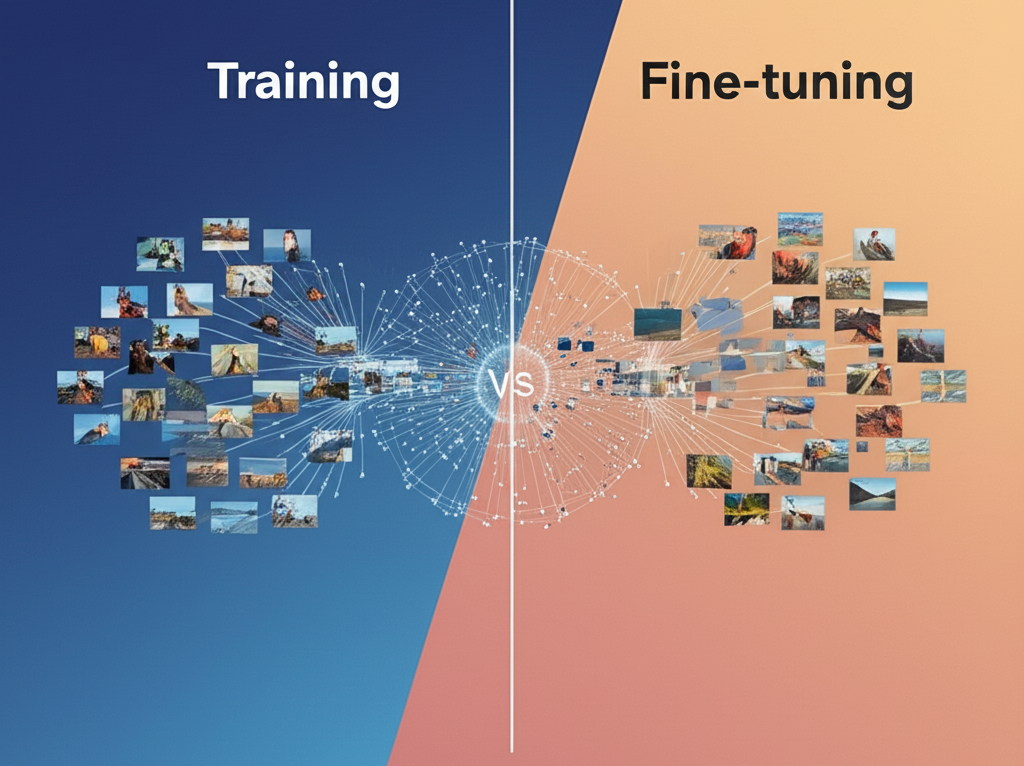

Training vs. Fine-Tuning: Optimizing Your Image Recognition Models

When it comes to building image recognition models, there’s no one-size-fits-all solution. Developers and businesses often face a crucial decision: should they train a model from scratch or fine-tune an existing pre-trained model? Both approaches have unique advantages and challenges. Knowing when to use each can save time, reduce costs, and ultimately improve the accuracy of your models.

Training from Scratch

Training a model from scratch means starting with a neural network that has randomly initialized weights and letting it learn everything solely from your own dataset. This approach provides full control over the model’s architecture and behavior, making it ideal for highly specialized applications.

Advantages of Training from Scratch:

- Complete customization of model design.

- Avoids inheriting biases from a pre-training dataset, although your own data can still introduce bias.

- Better suited for very niche problems with no suitable pre-trained models.

Challenges:

- Requires vast amounts of labeled data to perform well.

- Computationally expensive, often needing days or weeks of training on powerful hardware.

- Higher risk of overfitting if the dataset is limited in size.

When to Choose This Approach:

If your task is highly specific and no pre-trained model aligns well with it, or if you need full control over the architecture, training from scratch might be the best choice. Just ensure you have enough data and computing resources to support it.

Fine-Tuning Pre-Trained Models

Fine-tuning leverages models already trained on massive datasets like ImageNet. Instead of learning everything anew, these models adapt their learned features to your particular task. This makes fine-tuning faster, more data-efficient, and less resource-intensive.
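
As a minimal sketch of that adaptation step, assuming PyTorch, the snippet below freezes a backbone and trains only a new classification head. The tiny `backbone` is a hypothetical stand-in so the example runs without downloading weights; in practice it would be a network pre-trained on a dataset such as ImageNet. The value `num_classes = 5`, the optimizer settings, and the random batch are likewise assumptions for illustration.

```python
import torch
from torch import nn

# Hypothetical stand-in for a pre-trained feature extractor. In a real project
# this would be a network loaded with pre-trained weights.
backbone = nn.Sequential(
    nn.Conv2d(3, 8, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.AdaptiveAvgPool2d(1),
    nn.Flatten(),  # output shape: (batch, 8)
)

# Freeze the backbone so its weights are not updated during training.
for param in backbone.parameters():
    param.requires_grad = False

# New task-specific head; num_classes is an assumed value for illustration.
num_classes = 5
head = nn.Linear(8, num_classes)
model = nn.Sequential(backbone, head)

# Hand the optimizer only the parameters that are still trainable.
trainable = [p for p in model.parameters() if p.requires_grad]
optimizer = torch.optim.SGD(trainable, lr=1e-3, momentum=0.9)

# One training step on random data, just to show the mechanics.
images = torch.randn(4, 3, 32, 32)
labels = torch.randint(0, num_classes, (4,))
loss = nn.functional.cross_entropy(model(images), labels)
optimizer.zero_grad()
loss.backward()
optimizer.step()
```

Because only the head's weight and bias receive gradients, each step updates a small fraction of the model, which is where much of the speed and data efficiency comes from.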

Benefits of Fine-Tuning:

- Achieves strong performance with significantly less data.

- Faster training times compared to starting from zero.

- Ideal when hardware and time are limited.

Potential Drawbacks:

- May not be as effective if your task differs greatly from the original training data.

- Requires careful handling to avoid “catastrophic forgetting,” where the model loses previously learned knowledge.

Ideal Use Cases:

Fine-tuning is typically the go-to for most practical image recognition tasks, especially if your dataset is small to medium-sized. It works well for applications that rely on common visual features, such as object detection, product identification, or facial recognition.

Best Practices for Fine-Tuning

To get the most out of fine-tuning, consider these tips:

- Freeze Early Layers: Early layers detect general features like edges and textures, which are often transferable. Freezing them helps preserve useful knowledge.

- Use Learning Rate Scheduling: Start with a low learning rate to gently adjust weights without losing prior learning, then adjust it over the course of training, for example by decaying it as the model converges.

- Data Augmentation: Use techniques like rotation, cropping, and flipping to artificially expand your dataset and improve generalization.
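
The scheduling and augmentation tips can be sketched in plain Python, with no framework required. The schedule below uses linear warmup followed by cosine decay, which is one common choice rather than the only option. The function names, the default `base_lr` of `1e-4`, and the list-of-rows image format are assumptions made for illustration. The augmentation covers flipping and cropping only; rotation is left out to keep the sketch short.

```python
import math
import random


def lr_at_step(step, total_steps, base_lr=1e-4, warmup_steps=100):
    """Linear warmup up to base_lr, then cosine decay toward zero."""
    if step < warmup_steps:
        return base_lr * (step + 1) / warmup_steps
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return 0.5 * base_lr * (1.0 + math.cos(math.pi * progress))


def random_flip_and_crop(image, crop_size, rng=random):
    """Randomly flip a 2D image (a list of rows) left-right, then crop a square."""
    if rng.random() < 0.5:
        image = [row[::-1] for row in image]
    height, width = len(image), len(image[0])
    top = rng.randint(0, height - crop_size)
    left = rng.randint(0, width - crop_size)
    return [row[left:left + crop_size] for row in image[top:top + crop_size]]


# Example usage on a 4x4 "image" whose pixels are just the numbers 0..15.
image = [[r * 4 + c for c in range(4)] for r in range(4)]
patch = random_flip_and_crop(image, crop_size=2, rng=random.Random(0))
```

In a real pipeline you would normally rely on your framework's built-in schedulers and image transforms; the point here is only to show what those components compute.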

The Hybrid Approach

Sometimes, a combined strategy works best. You might start by fine-tuning only the final layers of a pre-trained model, then gradually unfreeze more layers and continue training. This hybrid method balances speed and performance and often leads to more robust models.
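
One way to picture gradual unfreezing is as a schedule that decides which layer groups are trainable at each epoch. The sketch below is framework-agnostic and rests on stated assumptions: the function name `trainable_groups`, the idea of splitting a model into numbered groups, and the pace of one extra group every two epochs are all hypothetical choices, not a prescribed recipe.

```python
def trainable_groups(epoch, num_groups, epochs_per_stage=2):
    """Return the indices of the layer groups to train at `epoch`.

    Groups are ordered from input (0) to output (num_groups - 1). Training
    starts with only the last group and unfreezes one more group, working
    backward toward the input, every `epochs_per_stage` epochs.
    """
    unfrozen = min(num_groups, 1 + epoch // epochs_per_stage)
    return list(range(num_groups - unfrozen, num_groups))


for epoch in range(0, 8, 2):
    print(epoch, trainable_groups(epoch, num_groups=4))
# 0 [3]
# 2 [2, 3]
# 4 [1, 2, 3]
# 6 [0, 1, 2, 3]
```

In a training loop, the returned indices would determine which groups have gradient updates enabled for that epoch, so the final layers adapt first and the earlier, more general layers are touched last.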

Conclusion

Both training from scratch and fine-tuning have their place in image recognition projects. Training from scratch offers full flexibility but demands significant data and computational power. Fine-tuning provides a faster, more efficient route, at the cost of some flexibility when your task differs from the data the model was pre-trained on.

For most real-world applications, fine-tuning strikes the best balance, especially when paired with best practices and quality data. Ultimately, your choice should align with your project’s goals, data availability, and resource constraints.

By understanding the strengths and limitations of both approaches, you can optimize your image recognition models for success.